Spotlight on: Reducing global risks

From driving research to producing policies to improving systems, we look for the most promising ways to prevent and manage the most severe threats the world faces.

By funding our recommendations, our members have helped enact better government policies, produce cutting-edge research, and build up the world’s crisis response mechanisms and infrastructure.

The impact our community has helped create includes:

We enabled the Pacific Forum to restart the only Track 2 diplomatic dialogues between the US and China focused on strategic nuclear issues, allowing the group to meet in person for the first time in five years. We have extended our support for this critical work into 2025.

Blueprint Biosecurity is leading critical work on the next generation of biodefense, including advanced personal protective equipment and germicidal UV light.

The Berkeley Risk and Security Lab published new work identifying policy blind spots in the governance of frontier AI models. In early 2024, the lab presented the first public evidence of how leading Chinese AI labs evade US export controls on advanced AI chips, and analyzed what this means for the future of hardware-centric governance.

The International Biosecurity and Biosafety Initiative for Science (IBBIS) launched the Common Mechanism, an open-source, globally available tool for DNA synthesis screening that will help prevent the misuse of synthetic DNA. IBBIS is also working to uncover vulnerabilities and gaps in current screening practices.

Defusing great power competition

Our Global Catastrophic Risks (GCR) Fund focuses on defusing great power competition and building up international capacity for cooperation.

Growing tensions between the US, China, and Russia exacerbate the potentially catastrophic risks we face as a species—nuclear war, extreme pandemics, and even the potential arrival of artificial superintelligence.

Through the Fund, we've:

- Become a leading funder of backchannel diplomacy, crisis communications, and work to support international governance and treaties.

- Launched and strengthened US-China diplomatic efforts on extreme risks.

- Advocated for cost-effective personal protective equipment in national stockpiles.

- $5.1M contributed to the Fund

- $2.1M granted to high-impact opportunities

- 12 organizations and projects funded

We are at a dangerous time in the international security landscape, and no country can navigate the risks of the coming years alone. Tensions between the world’s great powers can undermine efforts to prepare for upheaval and instability.

The GCR Fund is stepping in to help humanity navigate these transformative years—including risks from rapid advances in AI and the specter of an AI Manhattan Project—more effectively. We identify and support the best projects to catalyze global cooperation on extreme risks. When these projects don’t exist, we create them.

Christian Ruhl

Global Catastrophic Risks Research Lead & Fund Manager

Establishing the Conclave on Great Powers and Extreme Risks

Think tanks, peace institutes and other organizations regularly host unofficial diplomatic dialogues where civil society actors—including retired politicians, diplomats, senior military veterans and other experts and practitioners—discuss global security. In the US, these efforts are not centrally organized, creating a highly fragmented landscape where some work is duplicated while other topics slip through the cracks.

In 2024, after working with current and former policymakers and hearing their frustrations with the lack of coordination and poor visibility into the broader ecosystem of backchannel diplomacy, we collaborated with the US National Academy of Sciences to co-design a new Conclave on Great Powers and Extreme Risks, which we funded with a grant of $429K.

The Conclave will serve as a first-of-its-kind forum, coordinating policy stakeholders involved in track 1.5 (‘semi-official’) and track 2 (‘unofficial’) dialogues between representatives of the great powers, namely the US, Russia, and China. This twice-yearly forum will allow policymakers to better survey the global picture, identify emerging patterns, and encourage collaboration among and between government and civil society actors.

▲ Photo by Michael Descharles on Unsplash

Improving AI cooperation with CEIP

The world is racing towards advanced AI (or artificial general intelligence, AGI), and great power competition in this race could have disastrous consequences that are largely being ignored.

As AI safety takes a back seat, many analysts expect major public-private partnerships on AI to develop as the strategic advantage of superintelligence becomes clear to the world’s militaries. A report to the US Congress in November 2024 recommended that “Congress establish and fund a Manhattan Project-like program dedicated to racing to and acquiring an [AGI] capability.” In January 2025, Stargate, a $500B AI infrastructure project, was announced, heightening these concerns.

To help address this problem, in December 2024 we began working with the Carnegie Endowment for International Peace (CEIP) to develop a project to improve decision-making on AI safety, coordinating a grant of $300K from a syndicate of donors and the Fund.

Focusing on the catastrophic risks of AI competition between the US and China, CEIP will work with AI labs and government officials to inform policy, host track 2 dialogues on extreme risks from frontier AI, and integrate AI safety into future large-scale public-private partnerships.

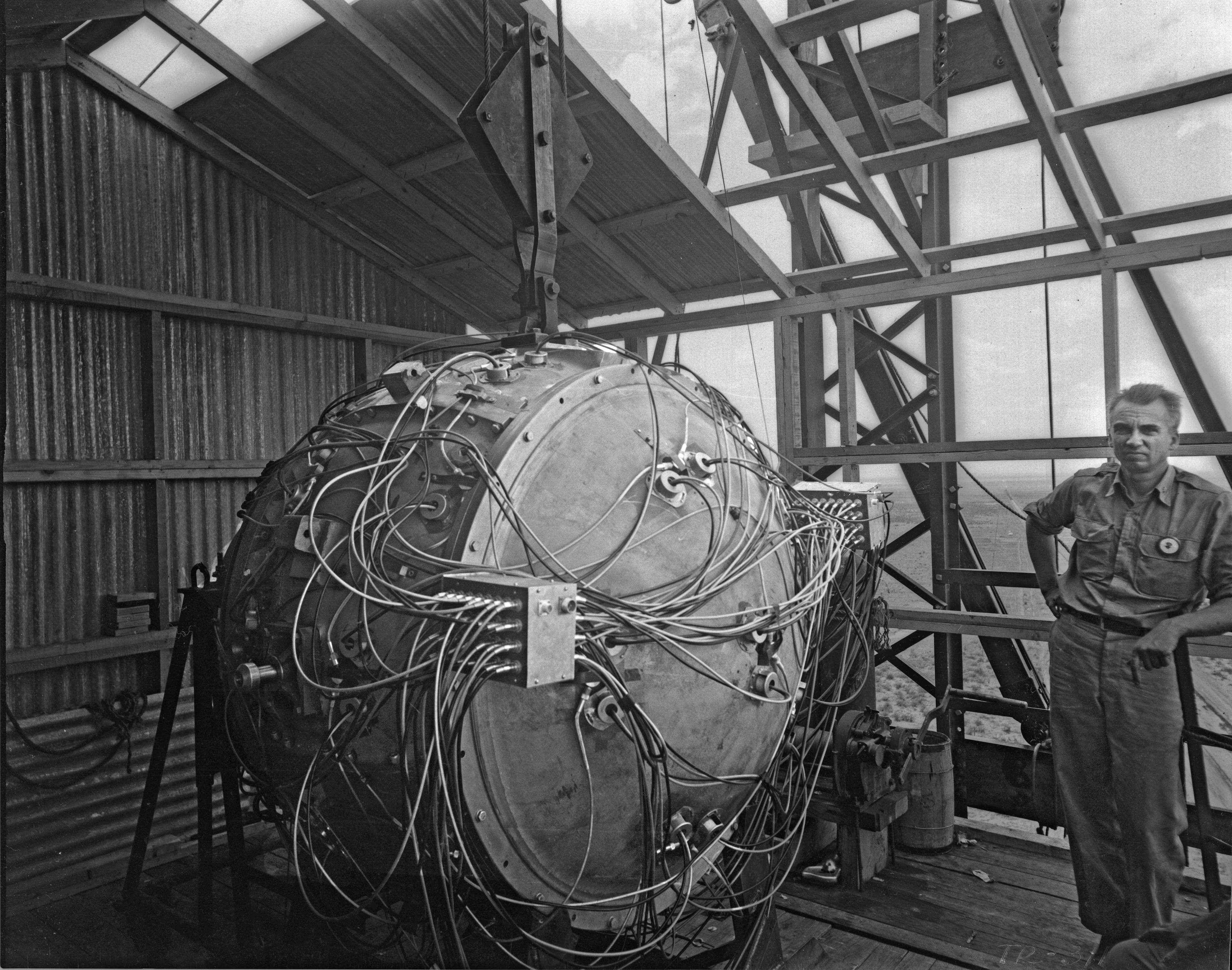

▲ A Manhattan Project scientist beside the assembled bomb. Nationalized AI projects could lead to catastrophically unsafe AI. Photo from Wikimedia Commons.

Launching Global Shield

Working together, six Founders Pledge members and the GCR Fund supported the creation of Global Shield, a first-of-its-kind international advocacy organization.

With a grant of $550K, we responded to an urgent funding gap, allowing the team to continue their work and launch the organization.

Global Shield hosted a policy summit on global catastrophic risk in September 2024. Members of Congress, as well as representatives from FEMA, RAND, and other organizations, met to discuss the implementation of the Global Catastrophic Risk Management Act (GCRMA, 6 U.S.C. § 821) and to explore how Congress can further advance global security and risk management.

After the summit, RAND published its assessment of existential and global catastrophic risk, which formed the basis for the US Department of Homeland Security’s own report to Congress. RAND’s findings emphasized that the US government has considerably more to do to understand and manage these risks.

Global Shield also worked with Congress to mandate a new review of the adequacy of continuity of operations (COOP) plans and programs. Adequate COOP plans and programs are the first and most critical cornerstone of building national resilience to all hazards. Current US plans are outdated and need major revision to reflect modern risks.

▲ Photo by Jason Leung on Unsplash